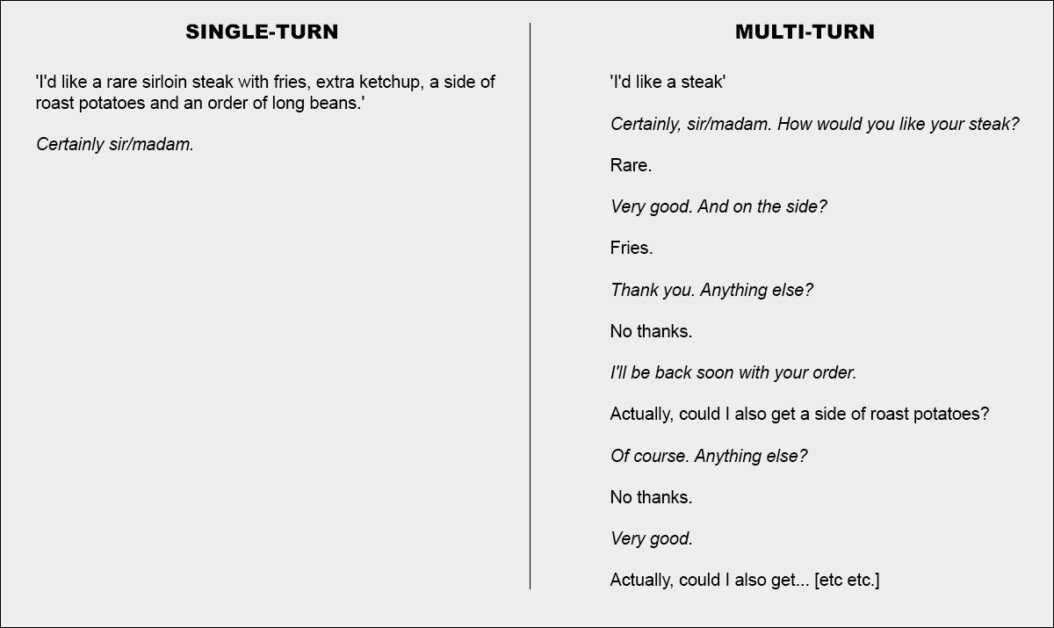

A new paper from Microsoft Research and Salesforce finds that even the most capable Large Language Models (LLMs) fall apart when instructions are given in stages rather than all at once. The authors found that performance drops by an average of 39 percent across six tasks when a prompt is split over multiple turns:

A single turn conversation (left) obtains the best results, but is unnatural for the end-user. A multi-turn conversation (right) finds even the highest-ranked and most performant LLMs losing the effective impetus in a conversation. Source: https://arxiv.org/pdf/2505.06120

More strikingly, the reliability of responses takes a nosedive, with prestigious models such as GPT-4.1 and Gemini 2.5 Pro swinging between near-perfect answers and manifest failures on the same task, depending on how it is delivered; further, output consistency can drop by more than half in the process.

To explore this behavior, the paper introduces a method called sharding*, which splits fully-specified prompts into smaller fragments and releases them one at a time into a conversation.

In the most basic terms, the difference is like that between giving a cohesive and comprehensive single order at a restaurant, which leaves the waiter with nothing to do but acknowledge the request, and working the order out collaboratively over several exchanges:

Two extreme versions of a restaurant conversation (not from the new paper, for illustrative purposes only).

For emphasis, the example above perhaps puts the customer in a negative light. But the core idea depicted in the second column is that of a transactional exchange that clarifies a problem-set, prior to addressing the problems – apparently a rational and reasonable way of approaching a task.

This setup is reflected in the new work’s drip-fed, sharded approach to LLM interaction. The authors note that LLMs often generate overly long responses and then continue to rely on their own insights even after those insights have been shown to be incorrect, or irrelevant. This tendency, combined with other factors, can cause the system to lose track of the exchange entirely.

In fact, the researchers note what many of us have found anecdotally – that the best way to get the conversation back on track is to start a new conversation with the LLM.

‘If a conversation with an LLM did not lead to expected outcomes, starting a new conversation that repeats the same information might yield significantly better outcomes than continuing an ongoing conversation.

‘This is because current LLMs can get lost in the conversation, and our experiments show that persisting in a conversation with the model is ineffective. In addition, since LLMs generate text with randomness, a new conversation may lead to improved outcomes.’

The authors acknowledge that agentic systems such as Autogen or LangChain can potentially improve the outcomes by acting as interpretative layers between the end-user and the LLM, only communicating with the LLM when they have gathered enough ‘sharded’ responses to coagulate into a single cohesive query (which the end-user will not be exposed to).

However, the authors contend that a separate abstraction layer should not be necessary, or else should be built directly into the source LLM:

‘An argument could be made that multi-turn capabilities are not a necessary feature of LLMs, as it can be offloaded to the agent framework. In other words, do we need native multi-turn support in LLMs when an agent framework can orchestrate interactions with users and leverage LLMs only as single-turn operators?…’

But having tested the proposition across their array of examples, they conclude:

‘[Relying] on an agent-like framework to process information might be limiting, and we argue LLMs should natively support multi-turn interaction’
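The interpretative-layer idea that the authors argue against can be sketched roughly as follows: fragments from the user are held back, and the LLM is contacted only once they can be merged into one query. This is a minimal illustration that uses neither Autogen nor LangChain; `ShardBuffer`, `required_fields` and `build_query` are hypothetical names, and 'enough information' is assumed here to mean that a fixed checklist of fields has been filled.

```python
from typing import Dict, Iterable, List, Optional


class ShardBuffer:
    """Collects user fragments until a checklist is complete (hypothetical helper)."""

    def __init__(self, required_fields: Iterable[str]) -> None:
        self.required: List[str] = list(required_fields)
        self.collected: Dict[str, str] = {}

    def add(self, field: str, text: str) -> None:
        # Reject fragments that do not belong to the assumed checklist.
        if field not in self.required:
            raise KeyError(f"unexpected field: {field}")
        self.collected[field] = text

    def missing(self) -> List[str]:
        return [f for f in self.required if f not in self.collected]

    def build_query(self) -> Optional[str]:
        """Return one consolidated single-turn prompt, or None if fragments are missing."""
        if self.missing():
            return None
        return "\n".join(f"- {self.collected[f]}" for f in self.required)


# Invented example: the LLM would only be called once build_query() returns text.
buffer = ShardBuffer(["goal", "constraint"])
buffer.add("goal", "Write a function that sums the numbers in a list.")
too_early = buffer.build_query()  # still None: the constraint is missing
buffer.add("constraint", "Only even numbers should be counted.")
query = buffer.build_query()
print(query)
```

The same consolidation step is what a user performs by hand when restarting a conversation with all of the information restated in one message.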

This interesting new paper is titled LLMs Get Lost In Multi-Turn Conversation, and comes from four researchers across MS Research and Salesforce.

Fragmented Conversations

The new method first breaks down conventional single-turn instructions into smaller shards, designed to be introduced at key moments during an LLM interaction, a structure that reflects the exploratory, back-and-forth style of engagement seen in systems such as ChatGPT or Google Gemini.

Each original instruction is a single, self-contained prompt that delivers the entire task in one go, combining a high-level question, supporting context, and any relevant conditions. The sharded version breaks this into multiple smaller parts, with each shard adding just one piece of information:

Paired instructions showing (a) a complete prompt delivered in a single turn and (b) its sharded version used to simulate an underspecified, multi-turn interaction. Semantically, each version delivers the same informational payload.

The first shard always introduces the main goal of the task, while the rest provide clarifying details. Together, they deliver the same content as the original prompt, but spread out naturally over several turns in the conversation.
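As a rough illustration of this pairing, a sharded instruction can be thought of as one full prompt plus an ordered list of shards, with the first shard carrying the goal. The `ShardedInstruction` class and the example text below are invented for illustration and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ShardedInstruction:
    """A fully-specified prompt paired with its sharded form (hypothetical structure)."""

    full_prompt: str
    shards: Tuple[str, ...]  # shards[0] states the main goal; the rest add one detail each

    @property
    def goal(self) -> str:
        return self.shards[0]

    @property
    def details(self) -> Tuple[str, ...]:
        return self.shards[1:]


# Invented example, not from the paper's dataset.
example = ShardedInstruction(
    full_prompt=(
        "Write a Python function that returns the sum of the even numbers in a "
        "list of integers, ignoring negative values, and returns 0 for an empty list."
    ),
    shards=(
        "Write a Python function that sums some numbers in a list.",
        "Only even numbers should be counted.",
        "Negative values should be ignored.",
        "An empty list should give 0.",
    ),
)

print(example.goal)
print(len(example.details), "clarifying shards")
```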

Each simulated conversation unfolds between three components: the assistant, the model under evaluation; the user, a simulated agent with access to the full instruction in sharded form; and the system, which invigilates and scores the exchange.

The conversation begins with the user revealing the first shard and the assistant replying freely. The system then classifies that response into one of several categories, such as a clarification request or a full answer attempt.

If the model does attempt an answer, a separate component extracts just the relevant span for evaluation, ignoring any surrounding text. On each new turn, the user reveals one additional shard, prompting another response. The exchange continues until either the model gets the answer right or there are no shards left to reveal:

Diagram of a sharded conversation simulation, with the evaluated model highlighted in red.
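The loop described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: shards are revealed in list order, and the classifier, answer extractor and scorer are passed in as plain functions. All names (`simulate_sharded`, `toy_assistant` and so on) are hypothetical, and the toy assistant stands in for a real model call.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": "user" | "assistant", "content": "..."}


def simulate_sharded(
    shards: List[str],
    assistant: Callable[[List[Message]], str],
    is_answer_attempt: Callable[[str], bool],
    extract_answer: Callable[[str], str],
    is_correct: Callable[[str], bool],
) -> dict:
    """Reveal one shard per turn until the answer is right or shards run out."""
    history: List[Message] = []
    for turn, shard in enumerate(shards, start=1):
        history.append({"role": "user", "content": shard})
        reply = assistant(history)
        history.append({"role": "assistant", "content": reply})
        # The "system" role: classify the reply, then score only the extracted answer.
        if is_answer_attempt(reply) and is_correct(extract_answer(reply)):
            return {"solved": True, "turns": turn, "history": history}
    return {"solved": False, "turns": len(shards), "history": history}


def toy_assistant(history: List[Message]) -> str:
    # Stand-in for a model: asks for more until it has seen three user turns.
    user_turns = sum(1 for m in history if m["role"] == "user")
    return "ANSWER: 42" if user_turns >= 3 else "Could you tell me more?"


result = simulate_sharded(
    shards=["goal", "detail A", "detail B", "detail C"],
    assistant=toy_assistant,
    is_answer_attempt=lambda r: r.startswith("ANSWER:"),
    extract_answer=lambda r: r.split("ANSWER:", 1)[1].strip(),
    is_correct=lambda a: a == "42",
)
print(result["solved"], result["turns"])
```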

Early tests showed that models often asked about information that hadn’t been shared yet, so the authors dropped the idea of revealing shards in a fixed order. Instead, a simulator was used to decide which shard to reveal next, based on how the conversation was going.

The user simulator, implemented using GPT-4o-mini, was therefore given full access to both the entire instruction and the conversation history, and was tasked with deciding at each turn which shard to reveal next.

The user simulator also rephrased each shard to maintain conversational flow, without altering the meaning. This allowed the simulation to reflect the ‘give-and-take’ of real dialogue, while preserving control over the task structure.
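To make the shard-selection step concrete, the sketch below replaces the GPT-4o-mini call with a crude word-overlap heuristic: it reveals whichever unrevealed shard shares the most words with the assistant's last reply. This is a stand-in chosen so that the example runs without any API, not the authors' method, and `pick_next_shard` is a hypothetical name. Rephrasing of the chosen shard is omitted.

```python
import re
from typing import List, Set


def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def pick_next_shard(shards: List[str], revealed: Set[int], last_reply: str) -> int:
    """Return the index of the unrevealed shard to reveal next.

    Stand-in heuristic (an assumption, not the paper's LLM-based simulator):
    prefer the shard with the largest word overlap with the assistant's last
    reply; ties go to the earliest shard.
    """
    remaining = [i for i in range(len(shards)) if i not in revealed]
    if not remaining:
        raise ValueError("no shards left to reveal")
    reply_words = _words(last_reply)
    return max(remaining, key=lambda i: (len(_words(shards[i]) & reply_words), -i))


# Invented shards for illustration.
shards = [
    "Sum numbers in a list.",
    "Only even numbers count.",
    "Negative numbers are ignored.",
    "Empty list gives 0.",
]
chosen = pick_next_shard(shards, revealed={0}, last_reply="Should negative values be included?")
fallback = pick_next_shard(shards, revealed={0}, last_reply="")
print(chosen, fallback)
```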

Before the conversation begins, the assistant is given only the basic information needed to complete the task, such as a database schema or an API reference. It is not told that the instructions will be broken up, and it is not guided toward any specific way of handling the conversation. This is done on purpose: in real-world use, models are almost never told that a prompt will be incomplete or updated over time, and leaving out this context helps the simulation reflect how the model behaves in a more realistic context.

GPT-4o-mini was also used to classify the model’s replies, and to extract any final answers from them. This kept the simulation flexible, but introduced occasional mistakes. However, after checking several hundred conversations by hand, the authors found that fewer than five percent had any problems, and that fewer than two percent showed a change in outcome because of them; they considered this a low enough error rate within the parameters of the project.

Simulation Scenarios

The authors used five types of simulation to test model behavior under different conditions, each a variation on how and when parts of the instruction are revealed.

In the Full setting, the model receives the entire instruction in a single turn. This represents the standard benchmark format and serves as the performance baseline.

The Sharded setting breaks the instruction into multiple pieces and delivers them one at a time, simulating a more realistic, underspecified conversation. This is the main setting used to test how well models handle multi-turn input.

In the Concat setting, the shards are stitched back together as a single list, preserving their wording but removing the turn-by-turn structure. This helps isolate the effects of conversational fragmentation from rephrasing or content loss.

The Recap setting runs like Sharded, but adds a final turn where all previous shards are restated before the model gives a final answer. This tests whether a summary prompt can help recover lost context.

Finally, Snowball goes further, by repeating all prior shards on every turn, keeping the full instruction visible as the conversation unfolds – and offering a more forgiving test of multi-turn ability.

Simulation types based on sharded instructions. A fully-specified prompt is split into smaller parts, which can then be used to simulate either single-turn (Full, Concat) or multi-turn (Sharded, Recap, Snowball) conversations, depending on how quickly the information is revealed.
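The five settings differ only in how the same shards are packaged into user turns, which a short sketch can make explicit. The function names and the exact wording of the joined text are assumptions made for illustration; the paper's own templates may differ.

```python
from typing import List


def full_turns(full_prompt: str) -> List[str]:
    # Full: the original instruction in one turn.
    return [full_prompt]


def concat_turns(shards: List[str]) -> List[str]:
    # Concat: all shards stitched into a single bulleted list, one turn.
    return ["\n".join(f"- {s}" for s in shards)]


def sharded_turns(shards: List[str]) -> List[str]:
    # Sharded: one shard per turn.
    return list(shards)


def recap_turns(shards: List[str]) -> List[str]:
    # Recap: like Sharded, plus a final turn restating every shard.
    return list(shards) + ["\n".join(f"- {s}" for s in shards)]


def snowball_turns(shards: List[str]) -> List[str]:
    # Snowball: each turn repeats all earlier shards and adds the new one.
    return ["\n".join(shards[: i + 1]) for i in range(len(shards))]


demo = ["a", "b", "c"]
print(concat_turns(demo))
print(recap_turns(demo))
print(snowball_turns(demo))
```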

Tasks and Metrics

Six generation tasks were chosen to cover both programming and natural language domains: code generation prompts were taken from HumanEval and LiveCodeBench; Text-to-SQL queries were sourced from Spider; API calls were constructed using data from the Berkeley Function Calling Leaderboard; elementary math problems were provided by GSM8K; tabular captioning tasks were based on ToTTo; and Multi-document summaries were drawn from the Summary of a Haystack dataset.

Model performance was measured using three core metrics: average performance, aptitude, and unreliability.

Average performance captured how well a model did overall across multiple attempts; aptitude reflected the best results a model could reach, based on its top-scoring outputs; and unreliability measured how much those results varied, with larger gaps between best and worst outcomes indicating less stable behavior.

All scores were placed on a 0-100 scale to ensure consistency across tasks, with metrics computed for each instruction and then averaged to provide an overall picture of model performance.
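A small sketch may help to separate the three metrics. The article describes them only qualitatively, so the cut-offs used here are an assumption: aptitude is taken as the 90th percentile of a model's scores on one instruction, and unreliability as the gap between the 90th and 10th percentiles. The scores are invented.

```python
from typing import Dict, List, Sequence


def percentile(values: Sequence[float], q: float) -> float:
    """Percentile with linear interpolation between the two nearest ranks."""
    xs = sorted(values)
    if not xs:
        raise ValueError("no scores given")
    pos = (len(xs) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)


def instruction_metrics(scores: Sequence[float]) -> Dict[str, float]:
    """Metrics for repeated runs of one model on one instruction (0-100 scale)."""
    aptitude = percentile(scores, 90)  # assumed cut-off
    floor = percentile(scores, 10)     # assumed cut-off
    return {
        "average": sum(scores) / len(scores),
        "aptitude": aptitude,
        "unreliability": aptitude - floor,
    }


def summarize(runs_by_instruction: Dict[str, List[float]]) -> Dict[str, float]:
    """Average each metric over all instructions."""
    per = [instruction_metrics(s) for s in runs_by_instruction.values()]
    return {k: sum(m[k] for m in per) / len(per) for k in per[0]}


# Ten invented runs of one model on one instruction.
scores = [100, 100, 90, 80, 100, 40, 100, 60, 100, 30]
metrics = instruction_metrics(scores)
print(metrics)
```

In this invented case the model can clearly reach a top score, yet its bottom runs are far lower, which is the pattern the unreliability metric is meant to expose.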

Six sharded tasks used in the experiments, covering both programming and natural language generation. Each task is shown with a fully-specified instruction and its sharded version. Between 90 and 120 instructions were adapted from established benchmarks for each task.

Contenders and Tests

In the initial simulations (with an estimated cost of $5000), 600 instructions spanning six tasks were sharded and used to simulate three conversation types: full, concat, and sharded. For each combination of model, instruction, and simulation type, ten conversations were run, producing over 200,000 simulations in total – a schema that made it possible to capture both overall performance and deeper measures of aptitude and reliability.
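The 'over 200,000' figure can be sanity-checked with simple arithmetic, assuming that every one of the fifteen tested models was run on every instruction in every simulation type (a full cross product, which the paper's exact count may not match).

```python
# Back-of-envelope count, assuming a full cross product of all factors.
instructions = 600
models = 15               # the fifteen models tested in the paper
simulation_types = 3      # full, concat, sharded
runs_per_combination = 10

total_simulations = instructions * models * simulation_types * runs_per_combination
print(f"{total_simulations:,} simulated conversations")
```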

Fifteen models were tested, spanning a wide range of providers and architectures: the OpenAI models GPT-4o (version 2024-11-20), GPT-4o-mini (2024-07-18), GPT-4.1 (2025-04-14), and the thinking model o3 (2025-04-16).

Anthropic models were Claude 3 Haiku (2024-03-07) and Claude 3.7 Sonnet (2025-02-19), accessed via Amazon Bedrock.

Google contributed Gemini 2.5 Flash (preview-04-17) and Gemini 2.5 Pro (preview-03-25). Meta models were Llama 3.1-8B-Instruct and Llama 3.3-70B-Instruct, as well as Llama 4 Scout-17B-16E, via Together AI.

The other entries were OLMo 2 13B, Phi-4, and Command-A, accessed either locally via Ollama or through the Cohere API; and Deepseek-R1, accessed through Amazon Bedrock.

For the two ‘thinking’ models (o3 and R1), token limits were raised to 10,000 to accommodate longer reasoning chains:

Average performance scores for each model across six tasks: code, database, actions, data-to-text, math, and summary. Results are shown for three simulation types: full, concat, and sharded. Models are ordered by their average full-setting score. Shading reflects the degree of performance drop from the full setting, with the final two columns reporting average declines for concat and sharded relative to full.

Regarding these results, the authors state†:

‘At a high level, every model sees its performance degrade on every task when comparing FULL and SHARDED performance, with an average degradation of -39%. We name this phenomenon Lost in Conversation: models that achieve stellar (90%+) performance in the lab-like setting of fully-specified, single-turn conversation struggle on the exact same tasks in a more realistic setting when the conversation is underspecified and multi-turn.’

Concat scores averaged 95 percent of full, indicating that the performance drop in the sharded setting cannot be explained by information loss. Smaller models such as Llama3.1-8B-Instruct, OLMo-2-13B, and Claude 3 Haiku showed more pronounced degradation under concat, suggesting that smaller models are generally less robust to rephrasing than larger ones.
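The percentages quoted here read most naturally as relative to the full-setting score rather than as absolute point differences. Under that assumption, the calculation looks like this; the three scores are invented to reproduce the headline ratios and are not taken from the paper's table.

```python
def percent_of_full(score: float, full_score: float) -> float:
    """Score expressed as a percentage of the full-setting score."""
    if full_score <= 0:
        raise ValueError("full_score must be positive")
    return 100.0 * score / full_score


def relative_degradation(score: float, full_score: float) -> float:
    """Relative change versus the full setting, in percent (negative = worse)."""
    return percent_of_full(score, full_score) - 100.0


# Invented scores on the 0-100 scale, for illustration only.
full, concat, sharded = 80.0, 76.0, 48.8

print(f"concat is {percent_of_full(concat, full):.1f}% of full")
print(f"sharded degradation: {relative_degradation(sharded, full):.1f}%")
```

Note that a 39 percent relative drop from a score of 80 is a loss of about 31 points, not 39.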

The authors observe†:

‘Surprisingly, more performant models (Claude 3.7 Sonnet, Gemini 2.5, GPT-4.1) get equally lost in conversation compared to smaller models (Llama3.1-8B-Instruct, Phi-4), with average degradations of 30-40%. This is in part due to metric definitions. Since smaller models achieve lower absolute scores in FULL, they have less scope for degradation than the better models.

‘In short, no matter how strong an LLM’s single-turn performance is, we observe large performance degradations in the multi-turn setting.’

The initial tests show that some models held up better on specific tasks (Command-A on Actions; Claude 3.7 Sonnet and GPT-4.1 on code; and Gemini 2.5 Pro on Data-to-Text), indicating that multi-turn ability varies by domain. Reasoning models such as o3 and Deepseek-R1 fared no better overall, perhaps because their longer replies introduced more assumptions, which tended to confuse the conversation.

Reliability

The relationship between aptitude and reliability, clear in single-turn simulations, appeared to fall apart under multi-turn conditions. While aptitude declined only modestly, unreliability doubled on average. Models that were stable in full-format prompts, such as GPT-4.1 and Gemini 2.5 Pro, became just as erratic as weaker models like Llama3.1-8B-Instruct or OLMo-2-13B once the instruction was fragmented.

Overview of aptitude and unreliability as shown in a box plot (a), followed by reliability outcomes from experiments with fifteen models (b), and results from the gradual sharding test where instructions were split into one to eight shards (c).

Model responses often varied by as much as 50 points on the same task, even when nothing new was added, suggesting that the drop in performance was not due to a lack of skill, but to the model becoming increasingly unstable across turns.

The paper states†:

‘[Though] better models tend to have slightly higher multi-turn aptitude, all models tend to have similar levels of unreliability. In other words, in multi-turn, underspecified settings, all models we test exhibit very high unreliability, with performance degrading 50 percent points on average between the best and worst simulated run for a fixed instruction.’

To test whether performance degradation was tied to the number of turns, the authors ran a gradual sharding experiment, splitting each instruction into one to eight shards (see right-most column in image above).

As the number of shards increased, unreliability rose steadily, confirming that even minor increases in turn count made models more unstable. Aptitude remained mostly unchanged, reinforcing that the issue lies in consistency, not capability.

Temperature Control

A separate set of experiments tested whether unreliability was simply a byproduct of randomness. To do this, the authors varied the temperature setting of both the assistant and the user simulator across three values: 1.0, 0.5, and 0.0.

In single-turn formats like full and concat, reducing the assistant’s temperature significantly improved reliability, cutting variation by as much as 80 percent; but in the sharded setting, the same intervention had little effect:

Unreliability scores for different combinations of assistant and user temperature across full, concat, and sharded settings, with lower values indicating greater response consistency.

Even when both the assistant and the user were set to zero temperature, unreliability remained high, with GPT-4o showing variation around 30 percent, suggesting that the instability seen in multi-turn conversations is not just stochastic noise, but a structural weakness in how models handle fragmented input.

Implications

The authors write of the implications of their findings at unusual length at the paper’s conclusion, arguing that strong single-turn performance does not guarantee multi-turn reliability, and cautioning against over-relying on fully-specified benchmarks when evaluating real-world readiness (since such benchmarks mask instability in more natural, fragmented interactions).

They also suggest that unreliability is not just a sampling artifact, but a fundamental limitation in how current models process evolving input, which raises concerns for agent frameworks that depend on sustained reasoning across turns.

Finally, they argue that multi-turn ability should be treated as a core capability of LLMs, not something offloaded to external systems.

The authors note that their results likely underestimate the true scale of the problem, and draw attention to the ideal conditions of the test: the user simulator in their setup had full access to the instruction and could reveal shards in an optimal order, which gave the assistant an unrealistically favorable context (in real-world use, users often supply fragmented or ambiguous prompts without knowing what the model needs to hear next).

Additionally, the assistant was evaluated immediately after each turn, before the full conversation unfolded, preventing later confusion or self-contradiction from being penalized, which would otherwise worsen performance. These choices, while necessary for experimental control, mean that the reliability gaps observed in practice are likely to be even greater than those reported.

They conclude:

‘[We] believe conducted simulations represent a benign testing ground for LLM multi-turn capabilities. Because of the overly simplified conditions of simulation, we believe the degradation observed in experiments is most likely an underestimate of LLM unreliability, and how frequently LLMs get lost in conversation in real-world settings.’

Conclusion

Anyone who has spent a significant amount of time with an LLM will likely recognize the issues formulated here, from practical experience; and most of us, I imagine, have intuitively abandoned ‘lost’ LLM conversations for fresh ones, in the hope that the LLM may ‘start over’ and cease to obsess about material that came up in a long, winding and increasingly infuriating exchange.

It’s interesting to note that throwing more context at the problem may not necessarily solve it; and indeed, to observe that the paper raises more questions than it provides answers (except in terms of ways to skip around the problem).

* Confusingly, this is unrelated to the conventional meaning of ‘sharding’ in AI.

† Authors’ own bold emphases.

First published Monday, May 12, 2025